Azure Spring Clean 2024

Hello, and welcome to Creating The Cloud Pirate!

This article is jointly written by Daniel McLoughlin and Tomasz Hamerla, and serves as our contribution to Azure Spring Clean 2024.

Azure Spring Clean is a community-driven annual event run by Microsoft MVPs Joe Carlyle and Thomas Thornton. It aims to promote well-managed Azure tenants and features articles, podcasts and videos from various Azure experts and enthusiasts who want to share their knowledge and experience with Azure Management.

In this article, Daniel and Tomasz will talk about The Cloud Pirate project, how it runs under the hood (on Azure), and how it is managed to promote collaboration.

Meet the authors

Daniel McLoughlin

Daniel is a Microsoft Most Valuable Professional (MVP) in Azure, and a Microsoft Certified Trainer (MCT). He works as a Microsoft Partner Technology Strategist in the UK and Ireland.

Daniel is the creator of The Cloud Pirate project, and is also known for Azure Lean Coffee. He lives in Ripon, a small city in North Yorkshire, England, with his wife and two sons.

Website: daniel.mcloughlin.cloud

Tomasz Hamerla

Tomasz is a cloud engineer well-versed in Azure and AWS, with a focus on data, AI and legal.

Website: tomaszhamerla.com

What is The Cloud Pirate?

In a nutshell, The Cloud Pirate is an automated content aggregator, running on Azure PaaS, and backed by Azure OpenAI.

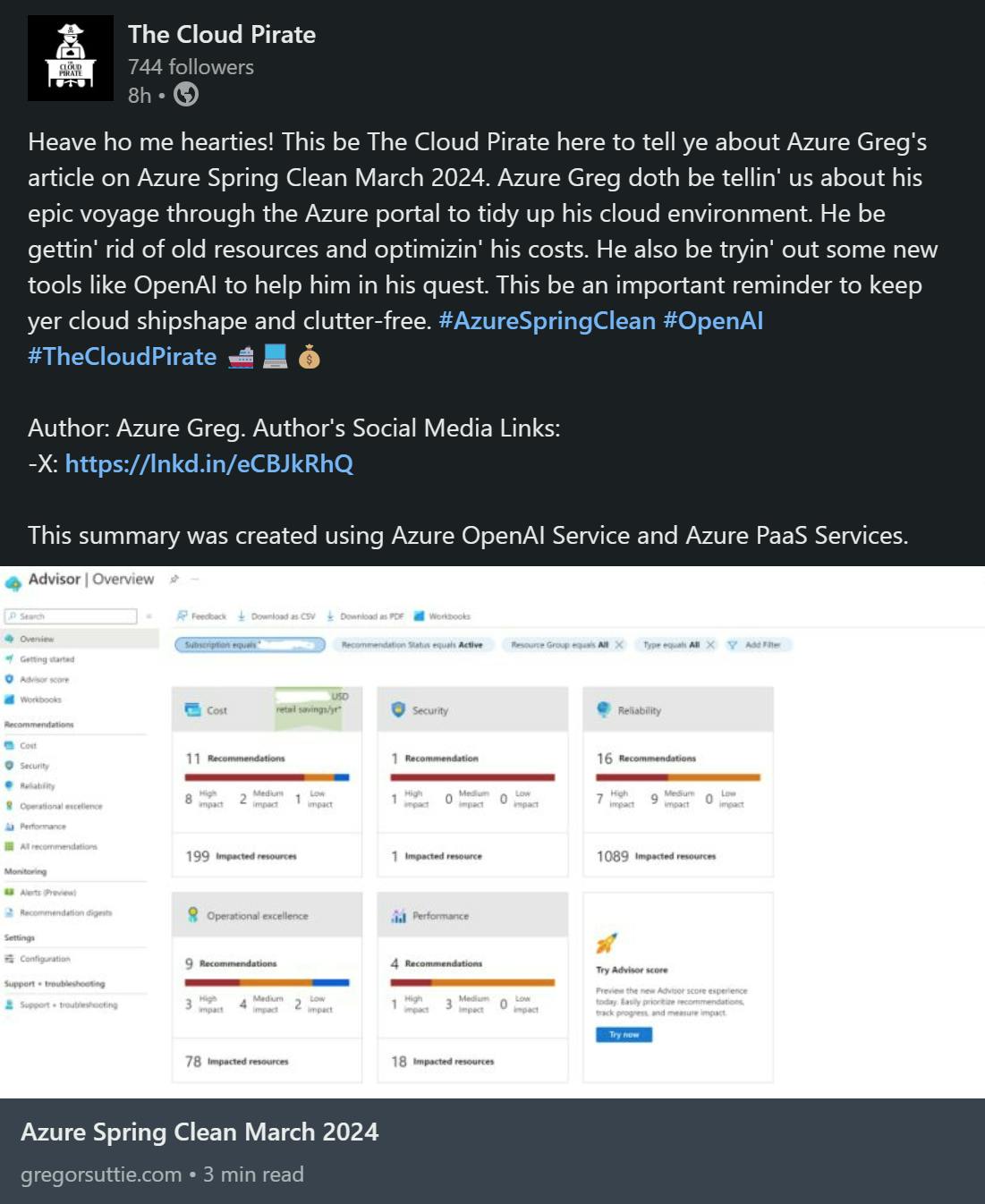

It captures new content created by the cloud tech community, summarises it (using AI), and redistributes it on numerous social media platforms. This helps the original author reach a wider audience, and serves the wider community by collating cloud tech community content all in one place.

The Cloud Pirate started life as a Twitter bot, called Azure Pirate, which came to an end when Elon Musk introduced costs for the Twitter APIs, making the project financially unviable. After a short break, the project was relaunched with an expanded scope, covering all Cloud, not just Azure, and on LinkedIn, not Twitter (now called X).

The focal point of The Cloud Pirate is the LinkedIn page, which you can find here. By following the page, you get access to the ever-updating feed of content shared by The Cloud Pirate.

The bot also publishes to the Mastodon and Bluesky social media platforms, thanks to the valiant efforts of Tomasz.

In addition to the social media platforms, there is also a weekly newsletter called The Cloud Pirate - Weekly Haul, which you can subscribe to by following this link.

For a more in-depth overview of The Cloud Pirate - please check out this article.

Collaboration

The Cloud Pirate is a project open to collaboration. It was initially created by Daniel, who took it as far as the integration with LinkedIn. Tomasz then came on board and helped extend the project to both Mastodon and Bluesky.

From an Azure perspective, the resources powering the project run on Dan's personal Azure tenant.

Tomasz was invited to the tenant as a guest account in Entra ID, and that guest account was given suitable RBAC roles on the resource groups or specific resources that Tomasz needed access to.

How does it run?

Overview

If we had to sum up how this project runs in one big statement, it would be:

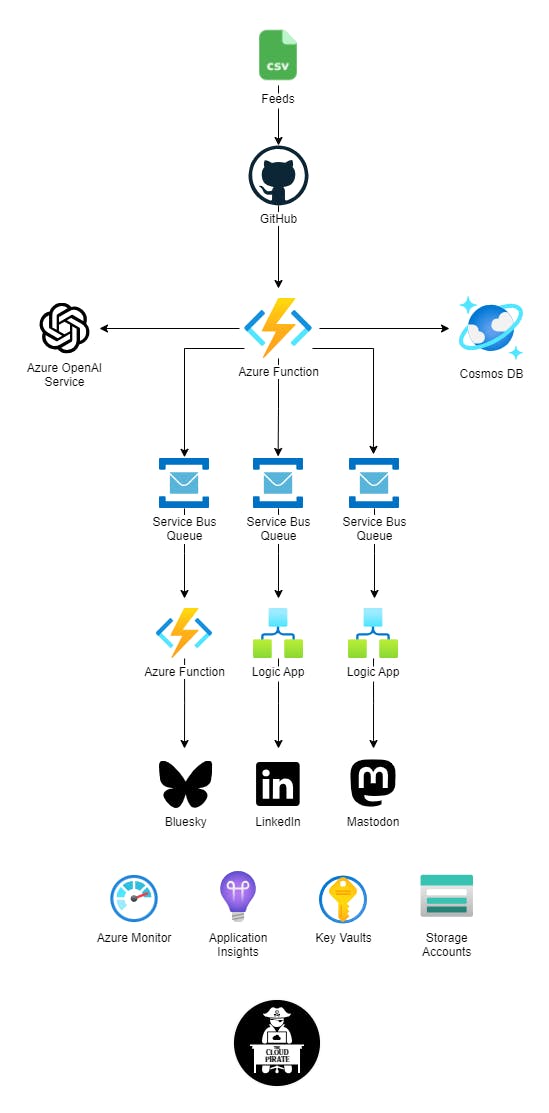

"An Azure Function App running PowerShell that checks a big list of RSS feeds for new content. If new content is found, and if it doesn't already exist as a database record (to prevent duplication), the post is summarised using AI, and added to messaging queues. Azure Logic Apps and another Azure Function periodically check these queues for messages, and if found, post the messages on social media".

Phew! 😂

The diagram below should make that easier to digest and understand:

RSS Feeds

At the heart of this project is the carefully crafted list of RSS feeds, in the form of a CSV file hosted on a public GitHub repo, found here. Entries are managed automatically through Git Pull Requests, or manually via a Microsoft Form, found here. Microsoft Forms was used so as not to exclude anyone unfamiliar with Git Pull Requests. Plus, it's free!
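To illustrate how a CSV feed list like this can be consumed, here is a minimal Python sketch (the project itself uses PowerShell). The column names `name`, `type` and `feed_url` and the sample rows are assumptions made for the example, not the layout of the real file.

```python
import csv
import io

# Hypothetical sample data; the real CSV's columns are not described in this article.
SAMPLE_CSV = """name,type,feed_url
Example Blog,blog,https://example.com/feed.xml
Example Podcast,podcast,https://example.org/rss
"""

def load_feeds(csv_text):
    """Parse the feed list into dictionaries, skipping rows without a feed URL."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if (row.get("feed_url") or "").strip()]

feeds = load_feeds(SAMPLE_CSV)
for feed in feeds:
    print(feed["type"], feed["feed_url"])
```

Keeping the list as a flat CSV means a new entry is a one-line change, which suits both Pull Requests and manual additions from form submissions.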

Azure Function - Feed Reader

The Azure Function acting as the RSS feed reader is the biggie. It runs a timer-triggered series of PowerShell scripts, and does all the heavy lifting, such as iterating over every RSS feed sequentially, performing lookups and inserting new entries in Cosmos DB, throwing prompts against an Azure OpenAI instance, and posting messages onto Service Bus Queues.

From a PowerShell perspective, to interact with Cosmos DB we use the CosmosDB PowerShell Module by PlagueHO, and to interact with Azure OpenAI, we use PSOpenAI by mkht. Calls to the Service Bus Queues are via the REST API.
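As a rough idea of what a REST call to a Service Bus Queue involves, the Python sketch below builds (but does not send) a "send message" request. The article does not say how the project authenticates these calls, so the use of a Shared Access Signature here is an assumption, and the namespace, queue, policy name and key are all hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
import urllib.parse
import urllib.request

def make_sas_token(resource_uri, key_name, key, ttl_seconds=300, now=None):
    """Build a Service Bus Shared Access Signature token for resource_uri."""
    expiry = int((now if now is not None else time.time()) + ttl_seconds)
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signature is an HMAC-SHA256 over "<url-encoded URI>\n<expiry>".
    to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return f"SharedAccessSignature sr={encoded_uri}&sig={signature}&se={expiry}&skn={key_name}"

def build_send_request(namespace, queue, body, token):
    """Prepare a POST to the queue's /messages endpoint without sending it."""
    url = f"https://{namespace}.servicebus.windows.net/{queue}/messages"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": token, "Content-Type": "application/json"},
        method="POST",
    )

# Hypothetical values, for illustration only.
token = make_sas_token(
    "https://example-ns.servicebus.windows.net/linkedin", "send-policy", "not-a-real-key", now=1000
)
request = build_send_request("example-ns", "linkedin", {"title": "Ahoy"}, token)
```

Passing the prepared request to `urllib.request.urlopen` would actually send the message; that step is left out so the sketch has no side effects.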

The PowerShell code is stored in a private GitHub repo (for fear of judgement 🫢), and a GitHub Action is triggered on each commit, deploying the code into the Azure Function. Code is tested locally using Azure Functions Core Tools.

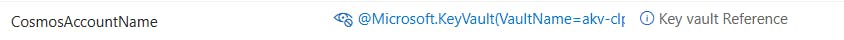

The Azure Function uses a System Assigned Managed Identity and Role Based Access Control (RBAC) roles to interact with the other Azure resources where possible.

Connection strings and other secrets are stored in an Azure Key Vault, and are injected into the PowerShell runtime by linking them through the Azure Function configuration, such as the below:
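For readers unfamiliar with the mechanism, here is a hedged Python sketch of how a Key Vault reference looks in the app settings and how code then reads the resolved value as an ordinary environment variable. The setting name, vault name and secret name are hypothetical, and the project's own code is PowerShell, not Python.

```python
import os

# Hypothetical app setting as it might be entered in the Function App configuration.
# The platform resolves the reference and exposes the secret value to the runtime.
APP_SETTINGS = {
    "COSMOS_CONNECTION_STRING": (
        "@Microsoft.KeyVault(SecretUri="
        "https://example-vault.vault.azure.net/secrets/cosmos-connection/)"
    ),
}

def get_secret(name):
    """Read a resolved app setting, failing loudly if it is missing or unresolved."""
    value = os.environ.get(name)
    if not value:
        raise KeyError(f"App setting {name!r} is not set")
    if value.startswith("@Microsoft.KeyVault("):
        # An unresolved reference surfaces as the raw reference string.
        raise ValueError(f"Key Vault reference for {name!r} was not resolved")
    return value
```

The benefit of this pattern is that the code never talks to Key Vault directly; rotating a secret is a vault change, not a code change.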

Cosmos DB

We use Cosmos DB in order to prevent duplicating social media posts.

The Azure Function timer runs every hour, but looks for posts (per RSS feed) published within a 48-hour window. The reason for this is that some people publish their content according to a schedule, which can skew the publish dates/times and mess up the RSS feed.

Getting the code to look back over a longer period ensures posts aren't missed. This introduces complexities though - how can the code tell which posts have been processed already, and which have not? This is where the need for database lookups comes in. Every processed post has its own database record, and the code looks for this before proceeding, thus preventing duplication.
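The look-back-plus-lookup idea can be sketched in a few lines of Python. This is an illustration only: an in-memory set stands in for the Cosmos DB records, and using the post URL as the record key is an assumption.

```python
from datetime import datetime, timedelta, timezone

LOOKBACK = timedelta(hours=48)

def select_new_posts(posts, processed_ids, now):
    """Return posts inside the look-back window that have no record yet."""
    cutoff = now - LOOKBACK
    fresh = []
    for post in posts:
        if post["published"] < cutoff:
            continue  # too old, outside the 48-hour window
        if post["url"] in processed_ids:
            continue  # already processed on an earlier run
        processed_ids.add(post["url"])  # stands in for writing the database record
        fresh.append(post)
    return fresh

now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
posts = [
    {"url": "https://example.com/a", "published": now - timedelta(hours=3)},
    {"url": "https://example.com/b", "published": now - timedelta(hours=60)},
]
seen = set()
first_run = select_new_posts(posts, seen, now)
second_run = select_new_posts(posts, seen, now + timedelta(hours=1))
```

On the first run only the recent post is returned; an hour later the same post is still inside the window, but the existing record stops it from being posted twice.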

Cosmos DB was chosen as a versatile, schema-less database, and we initially used the free tier until the requirements surpassed what that could provide. Mostly, it was chosen as it's Dan's go-to choice of DB!

Azure OpenAI Service

Back in the days of the Azure Pirate bot posting to Twitter, the message construction was all handled in PowerShell through string manipulation. With the introduction of the Azure OpenAI Service, the PowerShell now passes a carefully constructed prompt, including the original post author's name, the post title and URL. It also passes in the post type, be that a blog post, YouTube video, or podcast.

If the media type is a blog post, the prompt requests a short summary of the post, including the creation of social media hashtags. Oh, and this is where we also ask for the responses to be in a silly pirate voice! 😂🏴‍☠️

YouTube and podcast content cannot be analysed by this model, so with those, the prompt is to introduce the post based on the author and title, not summarise it.
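The branching described above might look something like the following Python sketch. The prompt wording and the post-type labels (`blog`, `youtube`, `podcast`) are made up for illustration; the project's real prompt is not published here.

```python
def build_prompt(author, title, url, post_type):
    """Build a prompt that summarises blog posts and merely introduces other media."""
    subject = f'"{title}" by {author} ({url})'
    if post_type == "blog":
        task = "Write a short summary of this blog post, including social media hashtags"
    else:
        # Video and audio content cannot be read by the model, so only introduce it.
        task = f"Write a short introduction to this {post_type}, based only on its author and title"
    return f"{task}, in a silly pirate voice: {subject}"

print(build_prompt("Jane Doe", "Tagging at scale", "https://example.com/tagging", "blog"))
print(build_prompt("Jane Doe", "Tagging at scale", "https://example.com/ep1", "podcast"))
```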

Example:

Service Bus Queues

The Azure Function processing the RSS feeds runs hourly, and can identify tens of new posts in a single pass. Rather than attempting to post those to social media in batch (thus spamming all the followers, or causing throttling issues), Service Bus Queues were introduced as a mechanism of queuing up the posts. E.g. rather than trying to post 10 summaries at a time to LinkedIn, those 10 post summaries are added as individual messages to a dedicated Service Bus Queue.

The Azure PaaS resources used to post those messages to social media periodically read from the queues according to timers, meaning that the social media posts are drip-fed incrementally, rather than being posted in bulk.

Multiple Service Bus Queues are in use, split by social media platform (currently LinkedIn, Mastodon and Bluesky). We chose this approach over multiple queues within a single Service Bus, or a Service Bus topic, as it made life easier for collaboration between multiple individuals working on the project, i.e. it was easier to create dedicated resources and control access via RBAC than it was to handle access within a single resource.
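The drip-feed effect is easy to see in a small simulation. In this Python sketch, in-memory deques stand in for the per-platform queues, and taking at most one message per timer tick is an assumption about how the readers are configured.

```python
from collections import deque

PLATFORMS = ("linkedin", "mastodon", "bluesky")

def enqueue(queues, summary):
    """Add one post summary to every platform's dedicated queue."""
    for platform in PLATFORMS:
        queues[platform].append(summary)

def on_timer(queues, platform):
    """Simulate one timer tick: take at most one message for this platform."""
    queue = queues[platform]
    return queue.popleft() if queue else None

queues = {platform: deque() for platform in PLATFORMS}
for i in range(10):
    enqueue(queues, f"summary {i}")

posted = on_timer(queues, "linkedin")  # only the oldest summary goes out this tick
```

Ten summaries found in a single hourly pass therefore leave each queue one tick at a time, and each platform drains at its own pace.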

LinkedIn integration

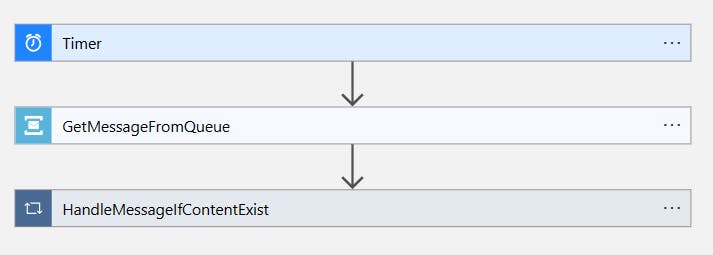

Dan (rather controversially) chose to use a Logic App to post to LinkedIn, rather than his favoured and beloved Azure Functions. Being totally honest here, he did this because he was lazy, and didn't want to read LinkedIn's API docs! This is where the beauty of Logic Apps comes in. Their integrations with 3rd party platforms such as LinkedIn (and many, many others) mean that connecting Azure resources to external systems is seamless - from a ClickOps perspective anyway.

The Logic App is set to run according to a timer. When it executes, it checks for messages in a dedicated Service Bus Queue.

If a new message is found, logic exists to pull the message (as JSON), parse it into individual components (such as post title, commentary, URL and optional thumbnail image), post the message to LinkedIn, and then complete the message, removing it from the Service Bus Queue.

If no message is found, the flow completes. If an error is thrown for whatever reason, any pulled message is not completed and remains in the queue, ready for the next run.
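The "complete only on success" behaviour is the important part of that flow. The Logic App itself is built in the designer, so the Python below is only a sketch of the same logic, with a deque standing in for the queue and hypothetical JSON field names.

```python
import json
from collections import deque

def run_once(queue, post_to_linkedin):
    """One timer run: peek the next message, post it, complete it only on success."""
    if not queue:
        return "empty"
    raw = queue[0]  # peek: the message stays in the queue for now
    try:
        message = json.loads(raw)
        post_to_linkedin(
            message["title"],
            message["commentary"],
            message["url"],
            message.get("thumbnail"),  # optional
        )
    except Exception:
        return "failed"  # not completed, so it is picked up again on the next run
    queue.popleft()  # complete: remove the message from the queue
    return "posted"
```

Because the message is only removed after the post succeeds, a transient LinkedIn or parsing failure costs one run rather than losing the post.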

Mastodon integration (by Tomasz)

Governance: instance challenges

Logic Apps: simple manual request

Governance

For Mastodon, the key issue was instance governance. Mastodon is decentralized: there are many servers to choose from, and each can have its own rules! Complex, huh? Here are the key challenges I faced while developing the bot:

discovery: are tech folks on the instance? or is it easily discoverable to tech folks?

instance limitations for bots: are there extra limits on the instance? post limits? discovery limits?

admin contact: is it easy to contact the admin and negotiate? or am I stuck with the defaults (or an autoreply)?

For all of those, Hachyderm turned out to be a good fit. I already hosted a bot there, and while there are limits, the contact with admins has been excellent and limits can be expanded. Shout-out to https://hachyderm.io/@quintessence, the instance admin!

Important notice: if you choose the botsinspace instance, be aware that it limits the visibility of posts.

Logic Apps

For making the API request itself, I chose Logic Apps, because it required less work than writing the code. The process of making the API request is quite straightforward, and well-described on Jani Karhunen's blog!
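To show how simple the request is, here is a Python sketch that prepares (but does not send) a call to Mastodon's status endpoint. The project makes this call from a Logic App, not from code, and the access token shown is a placeholder.

```python
import urllib.parse
import urllib.request

def build_status_request(instance_url, access_token, text):
    """Prepare a POST to /api/v1/statuses that would publish text as a new post."""
    data = urllib.parse.urlencode({"status": text}).encode("utf-8")
    return urllib.request.Request(
        f"{instance_url}/api/v1/statuses",
        data=data,
        headers={"Authorization": f"Bearer {access_token}"},
        method="POST",
    )

# Placeholder token; a real one comes from the bot account's application settings.
request = build_status_request("https://hachyderm.io", "PLACEHOLDER_TOKEN", "Ahoy there")
```

A single authenticated POST with one form field is all that is needed, which is why an HTTP action in a Logic App is enough here.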

Memorial

To honor Kris Nova, the founder of Hachyderm, I encourage you to donate to: https://translifeline.org/donate

Bluesky integration (by Tomasz)

JavaScript: native ecosystem support

Azure programming model: solved by downgrading to v1

Gotcha: links not autoexpanded

Choosing the tooling

For Bluesky, I decided to write the code myself. The platform is targeted at developers, and the request process is too complicated to do by hand in Logic Apps. I chose JavaScript as the programming language because it had native platform support.

Azure programming model

Unfortunately, it turned out the newest Azure Functions programming model was buggy, and the issue hasn't been resolved to this day. To work around it, I chose the v1 programming model.

See these threads: https://www.reddit.com/r/AZURE/comments/16uqe6n/azure_function_no_job_functions_found_try_making/

https://github.com/Azure/azure-functions-python-worker/issues/1262

Links not autoexpanded

One surprising platform gotcha I came across was the deliberate design decision not to expand and check links automatically, as Twitter/X does. I worked around it by adding the appropriate code.
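One part of handling links on Bluesky is marking them up explicitly as rich-text facets, which index into the post text by UTF-8 byte offsets. The Python sketch below shows that idea under simple assumptions (a basic URL regex); it is not the project's JavaScript code, which may handle links differently.

```python
import re

URL_PATTERN = re.compile(r"https?://[^\s]+")

def link_facets(text):
    """Return link facets for every URL in text, using UTF-8 byte offsets."""
    facets = []
    for match in URL_PATTERN.finditer(text):
        url = match.group(0)
        # Offsets are counted in bytes, so emoji before the link shift the index.
        byte_start = len(text[: match.start()].encode("utf-8"))
        byte_end = byte_start + len(url.encode("utf-8"))
        facets.append(
            {
                "index": {"byteStart": byte_start, "byteEnd": byte_end},
                "features": [{"$type": "app.bsky.richtext.facet#link", "uri": url}],
            }
        )
    return facets
```

The resulting list would be sent as the `facets` field of the post record alongside the text. Counting characters instead of bytes is the classic mistake here, and it only shows up once a post contains non-ASCII text.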

Open-sourcing the code

Finally, the code was published on GitHub: https://github.com/thamerla/thecloudpirate-bluesky

Monitoring

Application Insights/Azure Monitor (with bespoke KQL queries) are used to look out for failed runs and code-level errors, which, if found, trigger emails via Action Groups. This is applied to all Azure Functions and Logic Apps.

Areas of improvement

This project is far from perfect, and there is a lot that could be done to align it better with Microsoft best practice, such as the below:

Parallel processing of RSS feeds.

Private networking, such as Private Endpoints and Service Endpoints.

End-to-end deployment automation (currently only partial via Terraform and GitHub Actions).

Resource tagging, and resource locks.

Lots more!

By not having the likes of the above in place, we run security risks, continuity risks and governance risks.

This makes it sound pretty shoddy! It's important to note though that this is a community-facing side-project, not a critical production workload. In scenarios like this, just because you can, does not always mean you should. It's a trade-off between risk and convenience.

It's also important to note that we know what best practice should be with this, and that we acknowledge what the risks are in relation to the type of project this is - and there's a lot to be said for that.

Conclusion

The Cloud Pirate is a community-driven project that leverages Azure PaaS and OpenAI to aggregate, summarise and share cloud tech content. It runs on a combination of Azure Function Apps, Logic Apps, Cosmos DB, Service Bus Queues, and Azure OpenAI Service, and integrates with various social media platforms such as LinkedIn, Mastodon, and Bluesky. The project is open to collaboration and welcomes contributions from anyone interested.

The Cloud Pirate demonstrates how Azure can be used to create innovative and engaging solutions for the cloud community, by the cloud community.

Get Involved

The Cloud Pirate project is open to collaboration. If you have ideas on functionality or areas of improvement, or you would like to integrate it into your own projects somehow, then please reach out to me on Twitter, here.

As an example, The Cloud Pirate project was linked to the AzureFeeds project, run by fellow MVP Luke Murray. A weekly extract of Azure-specific Cloud Pirate posts is included in the AzureFeeds weekly newsletter under the community section. You can sign up to that newsletter, here.

If you'd like to be added in as a data source, i.e. add your blog, podcast, YouTube etc. into the aggregator, you can either complete this form, or if you're familiar with Git and GitHub, feel free to create a Pull Request on the feeds repo, found here.